At CES 2026, nearly every major technology company promised the same thing in different words: assistants that finally understand us. These systems were not just answering questions. They were booking reservations, managing homes, summarizing daily life, and acting on a user’s behalf. The message was unmistakable. Language models had moved beyond conversation and into agency.

Yet watching these demonstrations felt familiar in an uncomfortable way. I have seen this confidence before, often at moments when language systems appear fluent while remaining fragile underneath. CES 2026 did not convince me that machines now understand human language. Instead, it exposed how quickly our expectations have outpaced our theories of meaning.

When an assistant takes action, language stops being a surface interface. It becomes a proxy for intent, context, preference, and consequence. That shift raises the bar for computational linguistics in ways that polished demos rarely acknowledge.

From chatting to acting: why agents raise the bar

Traditional conversational systems can afford to be wrong in relatively harmless ways. A vague or incorrect answer is frustrating but contained. Agentic systems are different. When language triggers actions, misunderstandings propagate into the real world.

From a computational linguistics perspective, this changes the problem itself. Language is no longer mapped only to responses but to plans. Commands encode goals, constraints, and assumptions that are often implicit. A request like “handle this later” presupposes shared context, temporal reasoning, and an understanding of what “this” refers to. These are discourse problems, not engineering edge cases.

This distinction echoes long-standing insights in linguistics. Winograd’s classic examples showed that surface structure alone is insufficient for understanding even simple sentences once world knowledge and intention are involved (Winograd). Agentic assistants bring that challenge back, this time with real consequences attached.

Instruction decomposition is not understanding

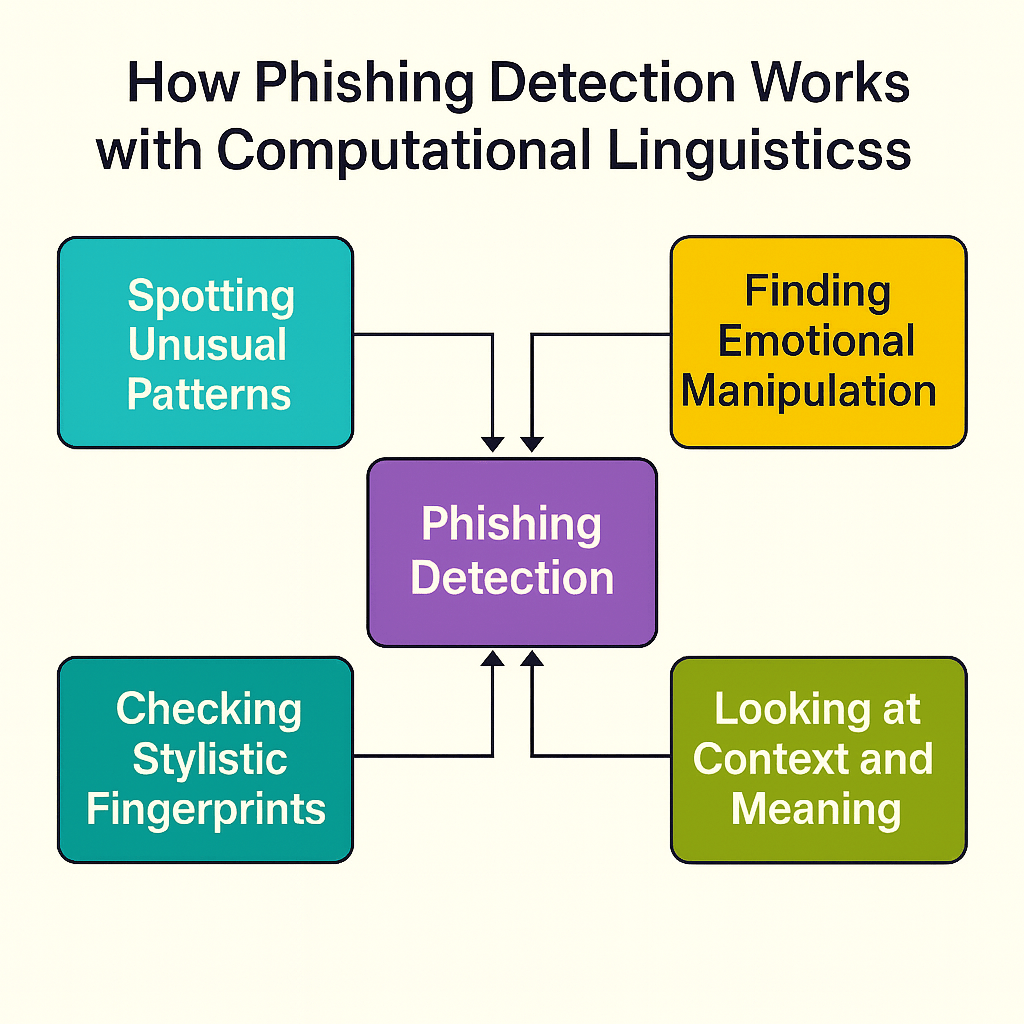

Many systems highlighted at CES rely on instruction decomposition. A user prompt is broken into smaller steps that are executed sequentially. While effective in constrained settings, this approach is often mistaken for genuine understanding.

Decomposition works best when goals are explicit and stable. Real users are neither. Goals evolve mid-interaction. Preferences conflict with past behavior. Instructions are underspecified. Linguistics has long studied these phenomena under pragmatics, where meaning depends on speaker intention, shared knowledge, and conversational norms (Grice).

Breaking an instruction into steps does not resolve ambiguity. It merely postpones it. Without a model of why a user said something, systems struggle to recover when their assumptions are wrong. Most agentic failures are not catastrophic. They are subtle misalignments that accumulate quietly.
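To make the point concrete, here is a minimal sketch of a toy decomposer. Everything in it is an assumption made for illustration: the `CONTEXT_DEPENDENT` word list, the `Step` type, and the splitting rule are hypothetical and do not describe any system shown at CES. It splits a request into steps and tracks which context-dependent expressions each step still contains.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately tiny list of expressions whose meaning
# depends on context the system may not have.
CONTEXT_DEPENDENT = {"this", "that", "it", "later", "there"}


@dataclass(frozen=True)
class Step:
    text: str
    unresolved: frozenset


def unresolved_terms(text):
    """Return the context-dependent words that appear in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return frozenset(w for w in words if w in CONTEXT_DEPENDENT)


def decompose(request):
    """Toy decomposition: split on commas, 'and then', and 'and'."""
    parts = re.split(r"\s*(?:,|\band then\b|\band\b)\s*", request)
    return [Step(p, unresolved_terms(p)) for p in parts if p]


request = "handle this later and tell Sam about it"
steps = decompose(request)

before = unresolved_terms(request)
after = frozenset().union(*(s.unresolved for s in steps))

for s in steps:
    print(s.text, "->", sorted(s.unresolved))
print("ambiguity removed by decomposition:", sorted(before - after))
```

In this sketch the request becomes two tidy steps, yet every unresolved expression in the original request is still present in some step. The plan looks more structured, but nothing about "this", "later", or "it" has been decided.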

Long-term memory is a discourse problem, not a storage problem

CES 2026 placed heavy emphasis on memory and personalization. Assistants now claim to remember preferences, habits, and prior conversations. The implicit assumption is that more memory leads to better understanding.

In linguistics, memory is not simple accumulation. It is interpretation. Discourse coherence depends on salience, relevance, and revision. Humans forget aggressively, reinterpret past statements, and update beliefs about one another constantly. Storing embeddings of prior interactions does not replicate this process.

In discourse representation theory, meaning emerges through structured updates to a shared representation of the discourse, not through raw recall alone (Kamp and Reyle). Long-context language models still struggle with this distinction. They can retrieve earlier information but often fail to decide what should matter now.
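The difference between recall and revision can be sketched in a few lines. This is a crude illustration under stated assumptions, not an implementation of discourse representation theory: the `DiscourseState` class, the `raw_recall` helper, and the sample log are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DiscourseState:
    """Toy shared model: one current belief per topic, plus what was revised."""
    beliefs: dict = field(default_factory=dict)
    superseded: list = field(default_factory=list)

    def update(self, topic, value):
        # A new statement on a known topic revises the old belief
        # instead of being stored alongside it.
        if topic in self.beliefs and self.beliefs[topic] != value:
            self.superseded.append((topic, self.beliefs[topic]))
        self.beliefs[topic] = value


def raw_recall(log, topic):
    """Storage-style memory: return everything ever said about a topic."""
    return [value for t, value in log if t == topic]


# Hypothetical interaction history, oldest first.
log = [
    ("diet", "vegetarian"),
    ("seating", "window"),
    ("diet", "pescatarian"),
]

state = DiscourseState()
for topic, value in log:
    state.update(topic, value)

recalled = raw_recall(log, "diet")
current = state.beliefs["diet"]

print("raw recall:", recalled)
print("current belief:", current)
print("superseded:", state.superseded)
```

Raw recall returns both dietary statements and leaves the caller to guess which one holds. The structured update commits to the later statement and records that the earlier one was revised. Real discourse is far messier than a key overwrite, but the sketch shows where the decision about "what should matter now" has to live.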

Multimodality does not remove ambiguity

Many CES demonstrations leaned heavily on multimodal interfaces. Visuals, screens, and gestures were presented as solutions to linguistic ambiguity. In practice, ambiguity persists even when more modalities are added.

Classic problems such as deixis remain unresolved. A command like “put that there” still requires assumptions about attention, intention, and relevance. Visual input often increases the number of possible referents rather than narrowing them. More context does not automatically produce clearer meaning.

Work on common ground helps explain why aligning language with perception is so difficult: human communication relies on shared assumptions rather than exhaustive specification (Clark). Agentic systems inherit this challenge rather than escaping it.

Evaluation is the quiet failure point

Perhaps the most concerning gap revealed by CES 2026 is evaluation. Success is typically defined as task completion. Did the system book the table? Did the lights turn on? These metrics ignore whether the system actually understood the user or simply arrived at the correct outcome by chance.

Computational linguistics has repeatedly warned against narrow benchmarks that mask shallow competence. BLEU, for example, scores a translation by its n-gram overlap with reference translations (Papineni et al.), so it rewards surface similarity while missing semantic failure. Agentic systems risk repeating this mistake at a higher level.
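A small worked example shows how overlap-based scoring can reward the wrong output. The sketch below computes clipped n-gram precision, which is one ingredient of BLEU. It is a simplification, not full BLEU: there is no brevity penalty and no combination across n-gram orders. The three sentences are invented for illustration.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-grams in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(candidate, reference, n):
    """Share of candidate n-grams also found in the reference,
    with each n-gram's count clipped to its count in the reference."""
    cand = Counter(ngrams(candidate.split(), n))
    ref = Counter(ngrams(reference.split(), n))
    total = sum(cand.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched / total


reference = "book a table for two at seven"
negated = "do not book a table for two at seven"   # opposite intent
paraphrase = "reserve dinner for a pair at 7pm"    # same intent

for name, sentence in [("negated", negated), ("paraphrase", paraphrase)]:
    p1 = clipped_precision(sentence, reference, 1)
    p2 = clipped_precision(sentence, reference, 2)
    print(f"{name}: unigram={p1:.2f} bigram={p2:.2f}")
```

The negated sentence, which reverses the user's intent, scores higher on both measures than the paraphrase that preserves it. Task-completion metrics for agents can fail in the same way: they check that the expected surface outcome occurred, not that it was the outcome the user meant.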

A system that completes a task while violating user intent is not truly successful. Meaningful evaluation must account for repair behavior, user satisfaction, and long-term trust. These are linguistic and social dimensions, not merely engineering ones.

CES as a mirror for the field

CES 2026 showcased ambition, not resolution. Agentic assistants highlight how far language technology has progressed, but they also expose unresolved questions at the heart of computational linguistics. Fluency is not understanding. Memory is not interpretation. Action is not comprehension.

If agentic AI is the future, then advances will depend less on making models larger and more on how deeply we understand language, context, and human intent.

References

Clark, Herbert H. Using Language. Cambridge University Press, 1996.

Grice, H. P. “Logic and Conversation.” Syntax and Semantics, vol. 3, edited by Peter Cole and Jerry L. Morgan, Academic Press, 1975, pp. 41–58.

Kamp, Hans, and Uwe Reyle. From Discourse to Logic. Springer, 1993.

Papineni, Kishore, et al. “BLEU: A Method for Automatic Evaluation of Machine Translation.” Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, 2002, pp. 311–318.

Winograd, Terry. “Understanding Natural Language.” Cognitive Psychology, vol. 3, no. 1, 1972, pp. 1–191.

— Andrew