I’m writing this post about a recent finding by GPTZero, reported by Shmatko et al. (2026), which sparked significant discussion across the research community (Goldman 2026). While hallucinations produced by large language models are widely acknowledged, far less attention has been paid to hallucinated citations. Even reviewers at a top conference such as NeurIPS failed to catch them, which shows how easily these errors can slip through existing academic safeguards.

For students and early-career researchers, this discovery should serve as a warning. AI tools can meaningfully improve research efficiency, especially during early-stage tasks like brainstorming, summarizing papers, or organizing a literature review. At the same time, these tools introduce new risks when they are treated as sources rather than assistants. Citation accuracy remains the responsibility of the researcher, not the model.

As a junior researcher, I have used AI tools such as ChatGPT to help with literature reviews in my own work. In practice, AI can make the initial stages of research much easier by surfacing themes, suggesting keywords, or summarizing large volumes of text. However, I have also seen how easily this convenience can introduce errors. Citation hallucinations are particularly dangerous because they often look plausible. A reference may appear to have a reasonable title, realistic authors, and a convincing venue, even though it does not actually exist. Unless each citation is verified, these errors can quietly make their way into drafts.

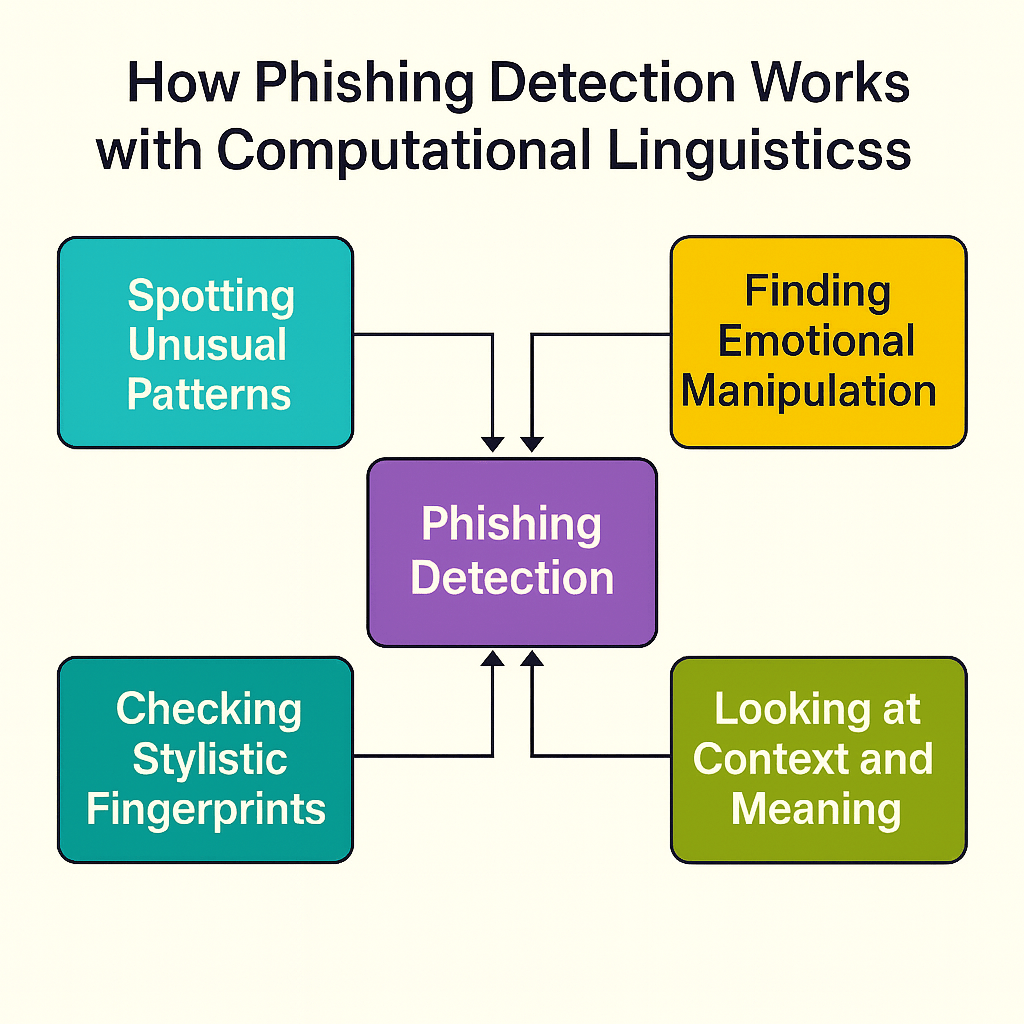

According to GPTZero, citation hallucinations tend to fall into several recurring patterns. One common issue is the combination or paraphrasing of titles, authors, or publication details from one or more real sources. Another is the outright fabrication of authors, titles, URLs, DOIs, or publication venues such as journals or conferences. A third pattern involves modifying real citations by extrapolating first names from initials, adding or dropping authors, or subtly paraphrasing titles in misleading ways. These kinds of errors are easy to overlook during review, particularly when the paper’s technical content appears sound.

The broader lesson here is not that AI tools should be avoided, but that they must be used carefully and responsibly. AI can be valuable for identifying research directions, generating questions, or helping navigate unfamiliar literature. It should not be relied on to generate final citations or to verify the existence of sources. For students in particular, it is important to build habits that prioritize checking references against trusted databases and original papers.
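To make that checking habit concrete, here is a minimal sketch of how a cited reference could be compared against a record I have looked up myself in a trusted database. It is only an illustration, not GPTZero's method: the `Reference` class, the `check_reference` function, and the 0.8 similarity threshold are all my own assumptions, and the trusted record is assumed to have been retrieved by hand. The checks loosely mirror the patterns described above: no matching record at all, a subtly altered title, and added or dropped authors.

```python
from __future__ import annotations

from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Reference:
    """Hypothetical minimal record; real databases return many more fields."""
    title: str
    authors: list[str]  # family names only, to sidestep initials vs. first names
    venue: str


def normalize(text: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    kept = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in text.lower())
    return " ".join(kept.split())


def check_reference(
    cited: Reference,
    trusted: Reference | None,
    title_threshold: float = 0.8,  # assumed cutoff, not an established standard
) -> list[str]:
    """Return a list of human-readable problems; an empty list means no mismatch."""
    if trusted is None:
        return ["no matching record found: possible fabrication"]

    problems = []

    # Title: exact match after normalization, else grade by similarity.
    cited_title, trusted_title = normalize(cited.title), normalize(trusted.title)
    if cited_title != trusted_title:
        similarity = SequenceMatcher(None, cited_title, trusted_title).ratio()
        if similarity >= title_threshold:
            problems.append("title is close but not identical: possible paraphrase")
        else:
            problems.append("title does not match the trusted record")

    # Authors: compare as sets to find added or dropped names.
    cited_authors = {normalize(a) for a in cited.authors}
    trusted_authors = {normalize(a) for a in trusted.authors}
    added = cited_authors - trusted_authors
    dropped = trusted_authors - cited_authors
    if added:
        problems.append(f"authors not in trusted record: {sorted(added)}")
    if dropped:
        problems.append(f"authors missing from citation: {sorted(dropped)}")

    # Venue: a plain normalized comparison.
    if normalize(cited.venue) != normalize(trusted.venue):
        problems.append("venue differs from the trusted record")

    return problems


if __name__ == "__main__":
    # Both records below are invented purely for illustration.
    trusted = Reference(
        "Learning Robust Widgets from Sparse Data", ["Lee", "Okafor"], "Example Conference 2024"
    )
    cited = Reference(
        "Learning Robust Widgets with Sparse Data", ["Lee", "Okafor", "Smith"], "Example Conference 2024"
    )
    for problem in check_reference(cited, trusted):
        print(problem)
```

A script like this can only flag mismatches. It cannot confirm that a source exists or says what the citing sentence claims, so I still open the original paper before a citation goes into a draft.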

Looking ahead, this finding reinforces an idea that has repeatedly shaped how I approach my own work. Strong research is not defined by speed alone, but by care, verification, and reflection. As AI becomes more deeply embedded in academic workflows, learning how to use it responsibly will matter just as much as learning the technical skills themselves.

References

Shmatko, N., Adam, A., and Esau, P. GPTZero finds 100 new hallucinations in NeurIPS 2025 accepted papers. Jan. 21, 2026.

Goldman, S. NeurIPS, one of the world’s top academic AI conferences, accepted research papers with 100+ AI-hallucinated citations, new report claims. Fortune, Jan. 21, 2026.

— Andrew
