I recently read an article on IEEE Spectrum titled “Large Language Models Are Improving Exponentially”. Here is a summary of its key points.

Benchmarking LLM Performance

Benchmarking large language models (LLMs) is challenging because their main goal is to produce text indistinguishable from human writing, and success at that goal doesn’t always correlate with the metrics traditionally used to judge processor performance. Even so, measuring their progress matters: it shows how much better LLMs are becoming over time and helps estimate when they might complete substantial tasks independently.

METR’s Findings on Exponential Improvement

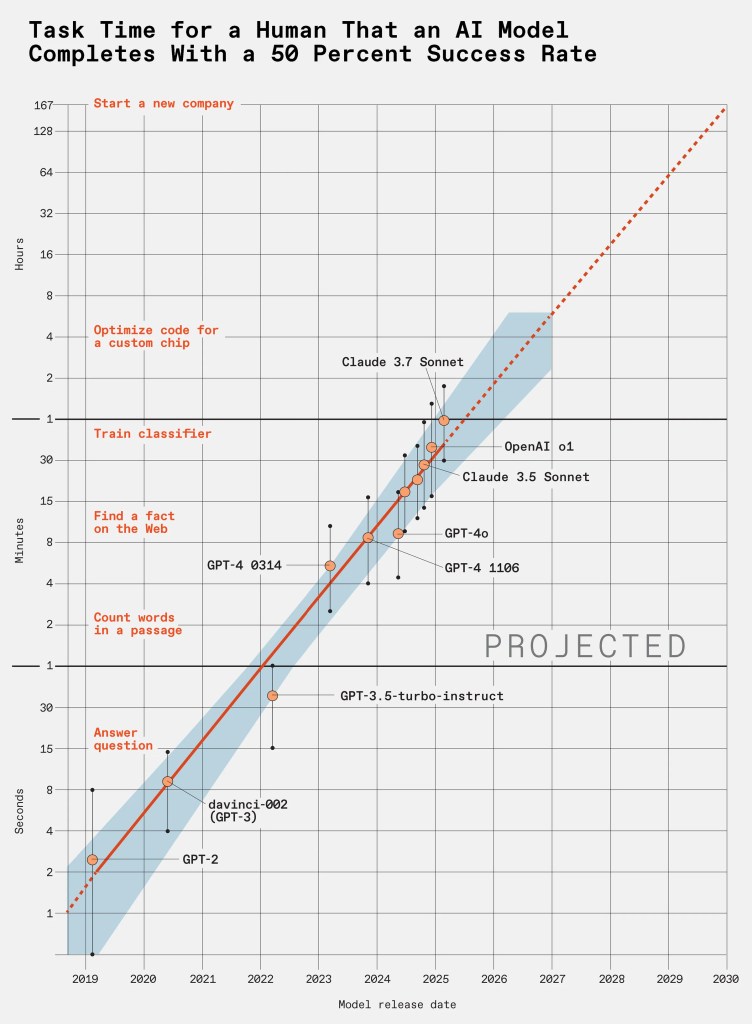

Researchers at Model Evaluation & Threat Research (METR) in Berkeley, California, published a paper in March 2025 titled “Measuring AI Ability to Complete Long Tasks.” They concluded that:

- The capabilities of key LLMs, measured by the length of the tasks they can complete, are doubling every seven months.

- By 2030, the most advanced LLMs could complete, with 50 percent reliability, a software-based task that would take humans a full month of 40-hour workweeks.

- These LLMs might accomplish such tasks much faster than humans, possibly within days or even hours.
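
To get a feel for what a seven-month doubling period implies, here is a minimal sketch of the arithmetic. The seven-month figure comes from the article; the one-hour starting horizon and the 4 x 40 hour approximation of a work month are my own illustrative assumptions, not numbers from the paper.

```python
import math

DOUBLING_MONTHS = 7.0  # doubling period reported in the article


def horizon_after(months, start_hours, doubling_months=DOUBLING_MONTHS):
    """Time horizon (hours) after `months` of steady exponential growth."""
    return start_hours * 2 ** (months / doubling_months)


def months_to_reach(target_hours, start_hours, doubling_months=DOUBLING_MONTHS):
    """Months of steady doubling needed to grow from start_hours to target_hours."""
    return doubling_months * math.log2(target_hours / start_hours)


# Hypothetical starting horizon, chosen only for illustration.
START_HOURS = 1.0
# One month of 40-hour workweeks, approximated here as 4 weeks x 40 hours.
TARGET_HOURS = 4 * 40

months = months_to_reach(TARGET_HOURS, START_HOURS)
print(f"{months:.1f} months (~{months / 12:.1f} years)")
# prints "51.3 months (~4.3 years)"
```

Under these assumptions, going from one-hour tasks to month-long tasks takes a bit more than seven doublings, which is roughly in line with the article's 2030 timeframe. A different starting horizon shifts the answer by seven months per factor of two.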

Potential Tasks by 2030

Tasks that LLMs might be able to perform by 2030 include:

- Starting up a company

- Writing a novel

- Greatly improving an existing LLM

According to AI researcher Zach Stein-Perlman, such capabilities would come with enormous stakes, involving both potential benefits and significant risks.

The Task-Completion Time Horizon Metric

At the core of METR’s work is a metric called “task-completion time horizon.” It measures the time it would take human programmers to complete a task that an LLM can complete with a specified reliability, such as 50 percent.
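
One way to picture how a reliability level maps to a time horizon is the sketch below. It assumes, purely for illustration, that an LLM's chance of success falls along a logistic curve in the logarithm of the human completion time; the parameters `A` and `B` are hypothetical stand-ins for fitted values, not numbers from the paper.

```python
import math


def success_probability(task_hours, a, b):
    """Modelled chance of success on a task that takes a human `task_hours`."""
    return 1.0 / (1.0 + math.exp(-(a - b * math.log2(task_hours))))


def time_horizon(reliability, a, b):
    """Human task length (hours) at which modelled success equals `reliability`."""
    logit = math.log(reliability / (1.0 - reliability))
    return 2 ** ((a - logit) / b)


# Hypothetical curve parameters, chosen only for illustration.
A, B = 2.0, 1.0

for reliability in (0.5, 0.8):
    hours = time_horizon(reliability, A, B)
    print(f"{reliability:.0%} reliability -> {hours:.2f} human-hours")
```

The point of the sketch is that the horizon depends on the reliability you demand: with the same curve, asking for 80 percent success yields a shorter horizon than asking for 50 percent.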

METR’s plots (see the image above) show:

- Exponential growth in LLM capabilities with a doubling period of around seven months (top graph).

- Tasks that are “messier” or more similar to real-world scenarios remain more challenging for LLMs (bottom graph).

Caveats About Growth and Risks

While these results raise concerns about rapid AI advancement, METR researcher Megan Kinniment noted that:

- Rapid acceleration does not necessarily result in “massively explosive growth.”

- Progress could be slowed by factors such as hardware or robotics bottlenecks, even if AI systems become very advanced.

Final Summary

Overall, the article emphasizes that LLMs are improving exponentially, potentially enabling them to handle complex, month-long human tasks by 2030. This progress comes with significant benefits and risks, and its trajectory may depend on external factors like hardware limitations.

You can read the full article here.

— Andrew